I am happy to announce that three papers I worked on were accepted at the IEEE International Symposium on Mixed and Augmented Reality (ISMAR) 2023 in Sydney, Australia. All three address different aspects of the Immersive Space to Think (IST) project, and are in addition to the previously announced TVCG paper on IST (helmed by Kylie Davidson), which will also be presented at this conference.

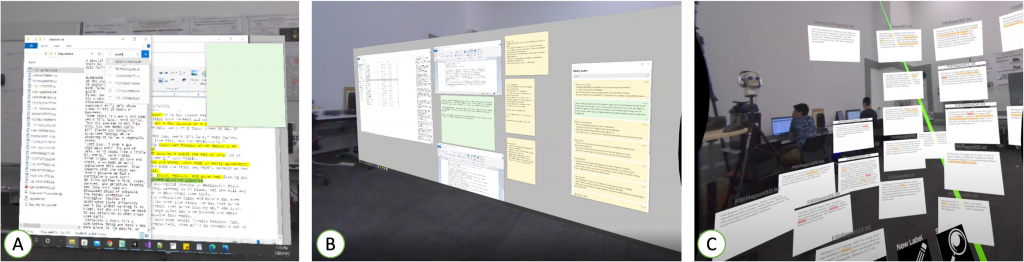

“Spaces to Think: A Comparison of Small, Large, and Immersive Displays for the Sensemaking Process” is the first paper, which I wrote with the help of Kylie Davidson, Leonardo Pavanatto, Ibrahim Tahmid, Chris North, and Doug Bowman. The work centers on the Immersive Space to Think project and how it compares to traditional 2D displays as well as the original Space to Think concept. The paper abstract and presentation are below:

Analysts need to process large amounts of data in order to extract concepts, themes, and plans of action based upon their findings. Different display technologies offer varying levels of space and interaction methods that change the way users can process data using them. In a comparative study, we investigated how the use of a single traditional monitor, a large, high-resolution two-dimensional monitor, and an immersive three-dimensional space using the Immersive Space to Think approach impacts the sensemaking process. We found that user satisfaction grows and frustration decreases as available space increases. We observed specific strategies users employ in the various conditions to assist with the processing of datasets. We also found an increased usage of spatial memory as space increased, which increases performance in artifact position recall tasks. In future systems supporting sensemaking, we recommend using display technologies that provide users with large amounts of space to organize information and analysis artifacts.

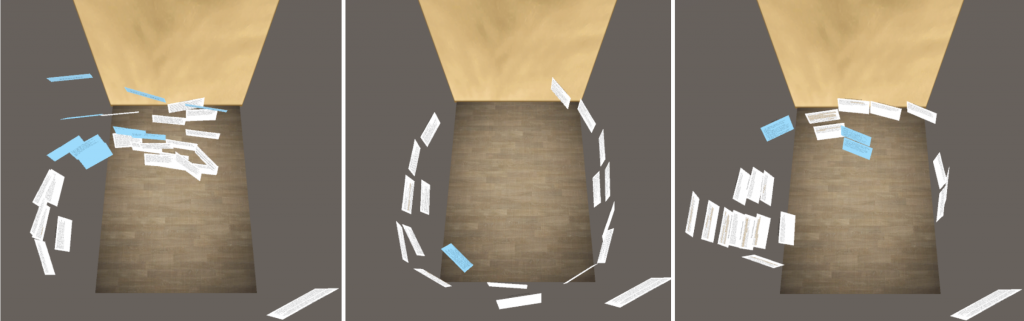

The second, helmed by my colleague Kylie Davidson, is entitled “Uncovering Best Practices in Immersive Space to Think” and covers which strategies are used in a multi-session sensemaking activity and how they compare to each other. She carried out this work with the assistance of Ibrahim Tahmid, Kirsten Whitley, Chris North, Doug Bowman, and me. Kylie presented this work in the Immersive Analytics session. The abstract is as follows:

As immersive analytics research becomes more popular, user studies have been aimed at evaluating the strategies and layouts of users’ sensemaking during a single focused analysis task. However, approaches to sensemaking strategies and layouts are likely to change as users become more familiar/proficient with the immersive analytics tool. In our work, we build upon an existing immersive analytics approach–Immersive Space to Think–to understand how schemas and strategies for sensemaking change across multiple analysis tasks. We conducted a user study with 14 participants who completed three different sensemaking tasks during three separate sessions. We found significant differences in the use of space and strategies for sensemaking across these sessions and correlations between participants’ strategies and the quality of their sensemaking. Using these findings, we propose guidelines for effective analysis approaches within immersive analytics systems for document-based sensemaking.

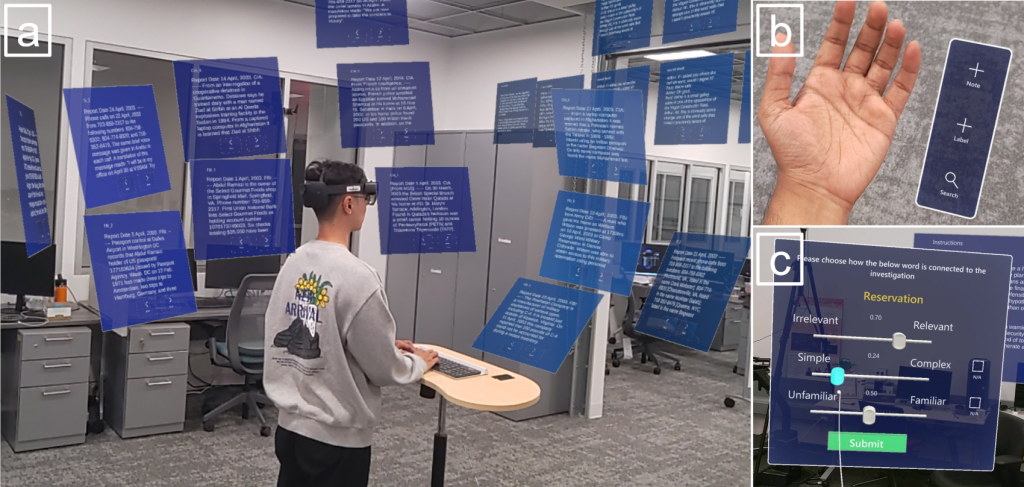

The last paper, helmed by Ibrahim Tahmid, centers on using eye-tracking as a predictive tool for the sensemaking process and is entitled “Evaluating the Feasibility of Predicting Information Relevance During Sensemaking with Eye Gaze Data.” He wrote this with the help of Kylie Davidson, Kirsten Whitley, Chris North, Doug Bowman, and me. The abstract is as follows:

Eye gaze patterns vary based on reading purpose and complexity, and can provide insights into a reader’s perception of the content. We hypothesize that during a complex sensemaking task with many text-based documents, we will be able to use eye-tracking data to predict the importance of documents and words, which could be the basis for intelligent suggestions made by the system to an analyst. We introduce a novel eye-gaze metric called ‘GazeScore’ that predicts an analyst’s perception of the relevance of each document and word when they perform a sensemaking task. We conducted a user study to assess the effectiveness of this metric and found strong evidence that documents and words with high GazeScores are perceived as more relevant, while those with low GazeScores were considered less relevant. We explore potential real-time applications of this metric to facilitate immersive sensemaking tasks by offering relevant suggestions.

I want to thank all my co-authors for their marvelous work and contributions to the field. I had a great time in Sydney, and can’t wait for the next ISMAR in Seattle in 2024!